Deepfakes MasterClass: Thoughtworks Arts and Baltan Labs

Thoughtworks Arts and Baltan Laboratories organized the two-week-long online masterclass: Deepfakes - Synthetic Media And Synthesis, led by director Ellen Pearlman and ThoughtWorker Julien Deswaef and facilitated by Leif Czakai of Baltan Labs.

Participants from varied backgrounds were grouped into three interdisciplinary teams to collaborate remotely on prototyping a synthetic media project. During the intensive two weeks, each team explored the wide possibilities of artificially generated media and investigated innovative, artistic ways to apply their findings.

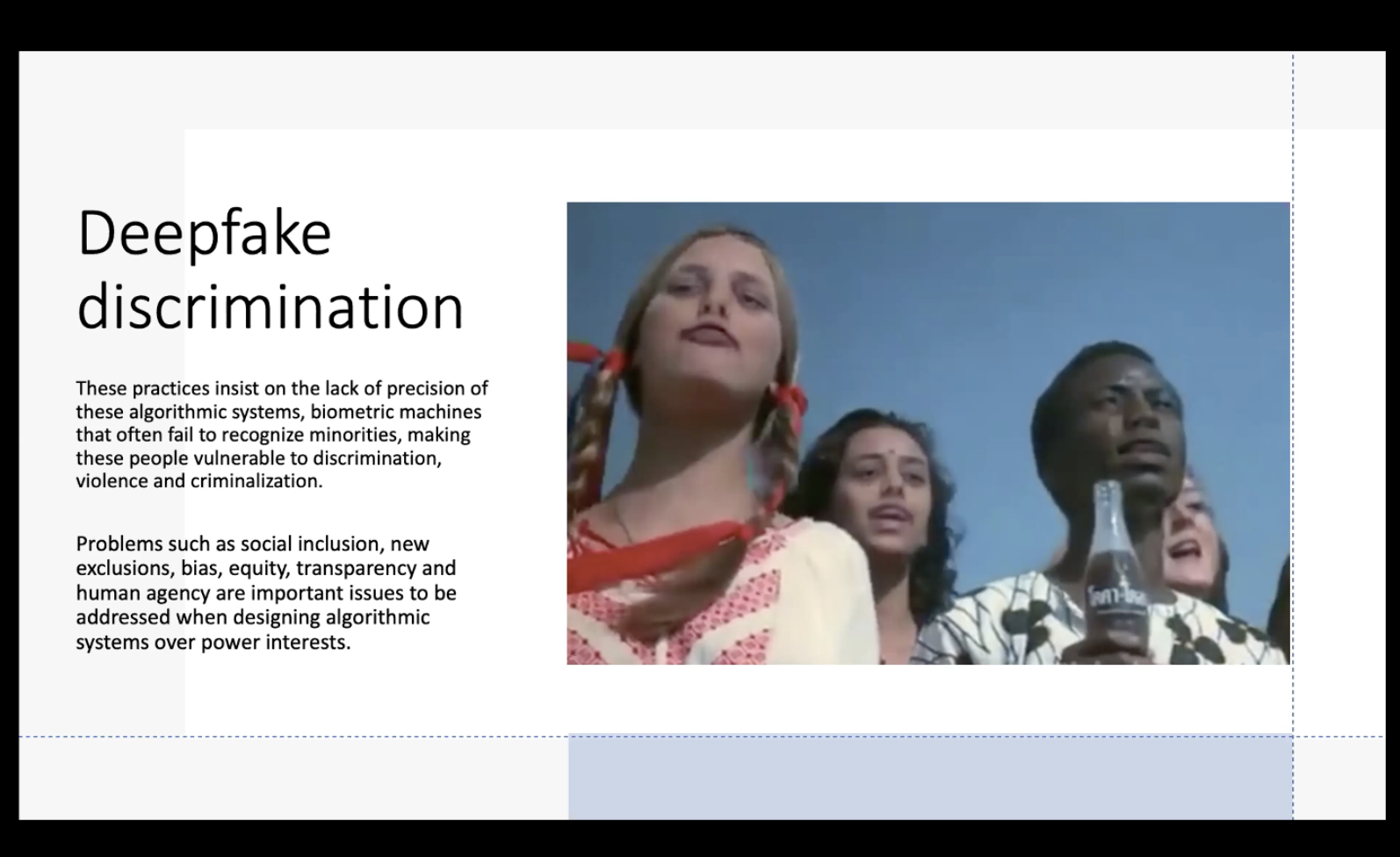

The projects were driven by questions about the societal impacts of this rapidly growing field. Teams researched deepfake discrimination found in the algorithms and data sets that generate synthetic visuals. They also explored how synthetic media could influence the masses in subliminal ways and learned that by deconstructing existing deepfake models, it is possible to highlight inherent biases.

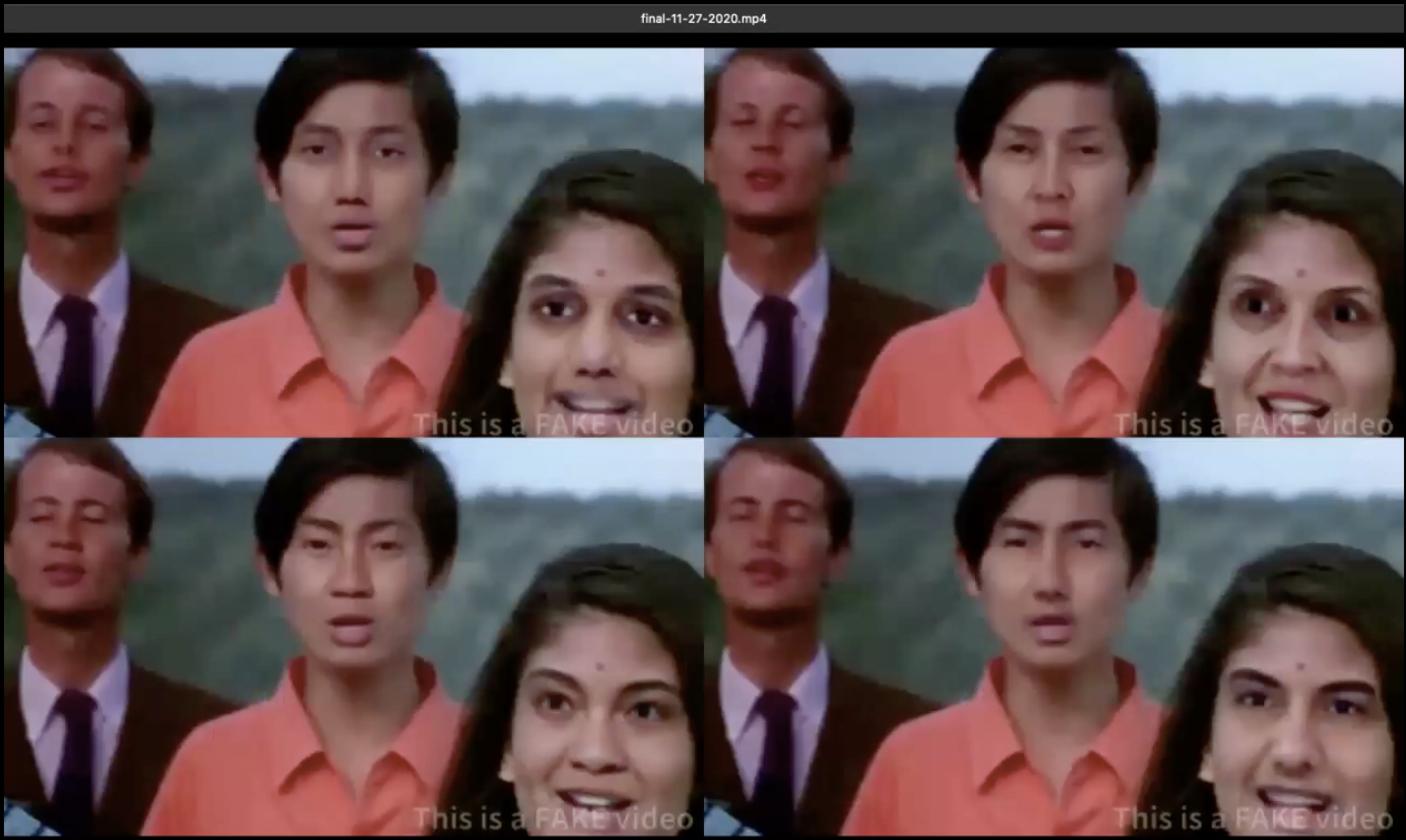

Team 1 mapped their faces and voices onto a restructured version of the famous 1971 Coca-Cola advertisement, teaching the whole world how to sing. They discovered how complicated it is to convincingly match their faces onto actors. The work raised questions about the implications of this technology, and whether any accountability measures or systems are in place to monitor how deepfake information is delivered.

Team 2 used synthetic media to frame how ‘information bubbles’, or ‘echo chambers’, preselect the information that reaches our social media feeds, surfacing content that can inflame and reinforce the user’s biases. Their project explored existing models and algorithms, comparing different infospheres by constructing two identical twin personas who belonged to different types of community feeds. Their aim was to learn how information from fictional personas can be compiled, and to what extent that derived content influences a chain of responses generated from user interaction. The implications of this inquiry into information bubbles became clear with the attack on the U.S. Capitol building on January 6, 2021.

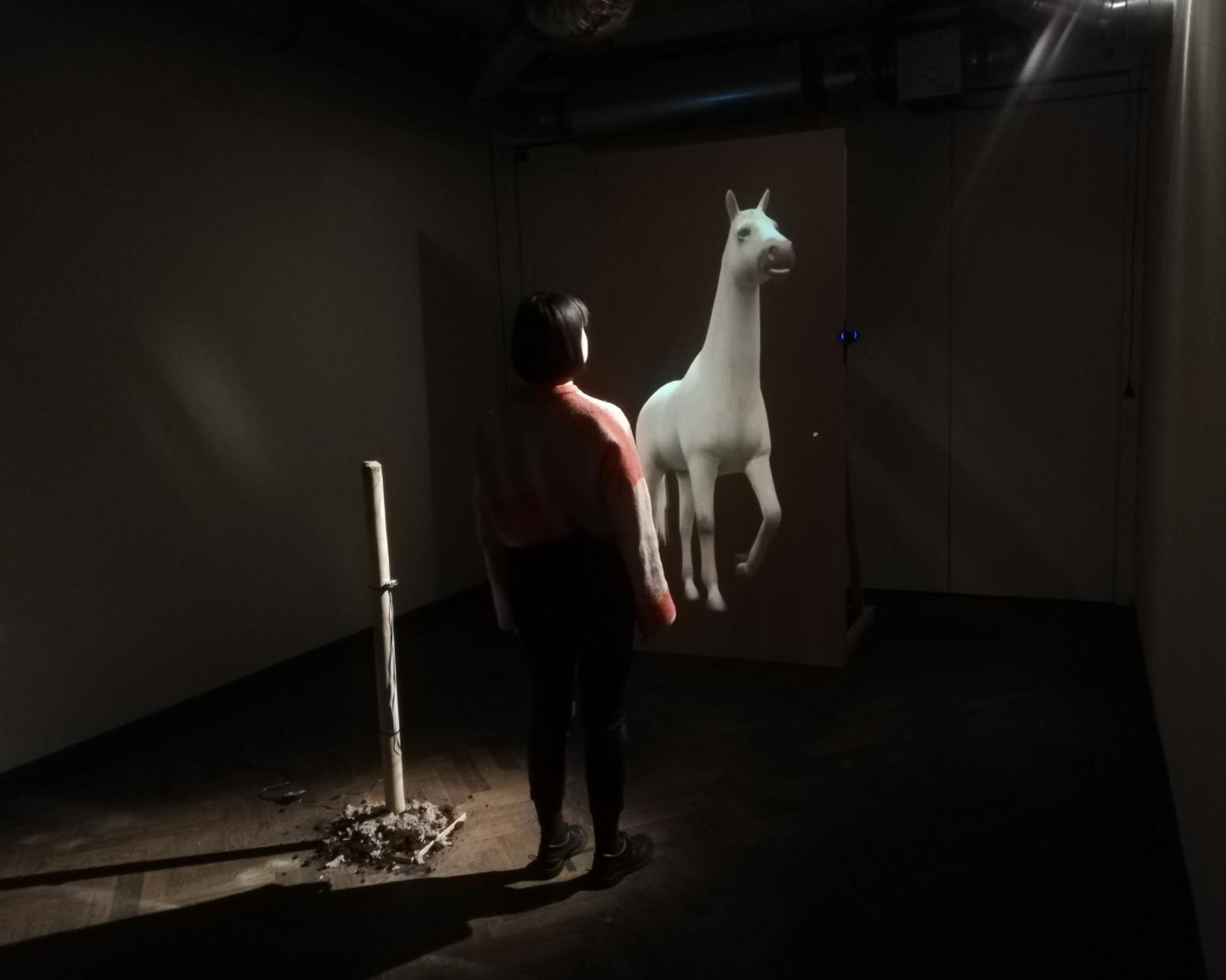

Team 3 compared the mechanisms found in smart and social technology by depicting a speculative story about computer and animal intelligence. Their project resulted in an interactive installation that was tested and presented in an exhibition space at Baltan Labs. The work encouraged viewers to consider the question: “What is the difference between a smart horse trying to read someone’s mind and a computer trying to do the same thing? Does this mean that computers will magically be able to outsmart us on all levels?”

The masterclass concluded with final team presentations of the synthetic media prototypes, in which the teams shared what they had learned in order to develop future projects on a larger scale.
